In this article, we will briefly explore a feature called "Workload Identity Federation for GKE" that was recently announced by GKE in their official blog.

Features Overview¶

Workload Identity Federation for GKE is an improved version of the original GKE Workload Identity feature. The main improvement is that it needs less configuration and offers a better user experience.

How to Use¶

Follow these steps to try this feature:

1. Create a GKE cluster with Workload Identity Federation for GKE enabled. You can find this option at: Create Cluster - Security - Enable Workload Identity.

2. Create a namespace and a Kubernetes service account for the test application, then bind IAM roles to that service account. One service account can have multiple roles. For example:

$ kubectl create ns demo-ns
$ kubectl -n demo-ns create serviceaccount demo-sa
$ gcloud projects add-iam-policy-binding projects/test-gke-XXX \
    --role=roles/container.clusterViewer \
    --member=principal://iam.googleapis.com/projects/23182XXXXXXX/locations/global/workloadIdentityPools/test-gke-XXXXXX.svc.id.goog/subject/ns/<namespace-name>/sa/<service-account-name> \
    --condition=None
$ gcloud storage buckets add-iam-policy-binding gs://<bucket-name> \
    --role=roles/storage.objectViewer \
    --member=principal://iam.googleapis.com/projects/23182XXXXXXX/locations/global/workloadIdentityPools/test-gke-XXXXXX.svc.id.goog/subject/ns/<namespace-name>/sa/<service-account-name> \
    --condition=None

3. Deploy the test application. The application pod must use the namespace and service account from step 2, and needs a nodeSelector so that it is scheduled onto nodes with the Workload Identity Federation for GKE feature enabled:

apiVersion: v1
kind: Pod
metadata:
  name: test-pod
  namespace: demo-ns
spec:
  nodeSelector:
    iam.gke.io/gke-metadata-server-enabled: "true"
  serviceAccountName: demo-sa
  containers:
  - name: test-pod
    image: google/cloud-sdk:slim
    command: ["sleep","infinity"]

4. After the pod is running, enter the test-pod container and access the instance metadata service to obtain an STS token. The official SDKs used by real business applications access the instance metadata service in a similar way to obtain STS tokens for calling cloud product APIs.

$ gcloud auth print-access-token

ya29.d.XXX

$ curl -H "Metadata-Flavor: Google" http://metadata.google.internal/computeMetadata/v1/instance/service-accounts/default/token

{"access_token":"ya29.d.XXX","expires_in":3423,"token_type":"Bearer"}
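The curl call above can also be made from application code. The following is a minimal sketch using only the Python standard library; the URL and the Metadata-Flavor header come from the command above, while the function names (`build_token_request`, `parse_token_response`, `fetch_access_token`) are illustrative and not part of any SDK. The network call itself only works from inside a pod on a node where the feature is enabled.

```python
import json
import urllib.request

# URL and header are taken from the curl command above.
TOKEN_URL = (
    "http://metadata.google.internal/computeMetadata/v1/"
    "instance/service-accounts/default/token"
)


def build_token_request(url: str = TOKEN_URL) -> urllib.request.Request:
    """Build the GET request, including the Metadata-Flavor header."""
    return urllib.request.Request(url, headers={"Metadata-Flavor": "Google"})


def parse_token_response(body: bytes) -> tuple[str, int]:
    """Return (access_token, expires_in) from the JSON response body."""
    data = json.loads(body)
    return data["access_token"], int(data["expires_in"])


def fetch_access_token(timeout: float = 5.0) -> tuple[str, int]:
    """Call the metadata server; expected to work only inside a GKE pod."""
    with urllib.request.urlopen(build_token_request(), timeout=timeout) as resp:
        return parse_token_response(resp.read())
```

Splitting request construction and response parsing from the network call keeps the two pure functions easy to check outside a cluster.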

- The obtained STS token will have all the permissions of the roles associated in step 2.

$ gcloud container node-pools list --zone us-central1 --cluster test

NAME MACHINE_TYPE DISK_SIZE_GB NODE_VERSION

default-pool e2-medium 100 1.30.6-gke.1125000

$ curl -X GET -H "Authorization: Bearer $(gcloud auth print-access-token)" \
    "https://storage.googleapis.com/storage/v1/b/demo-gke-workload-identity-federation/o"

{
  "kind": "storage#objects"
}
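The --member values used in step 2 follow a fixed pattern built from the project number, the workload pool, the namespace, and the service account name. As a small sketch, the helper below assembles that string; the function name `ksa_principal` is hypothetical, and the pattern is copied from the gcloud commands above rather than from any library.

```python
def ksa_principal(project_number: str, workload_pool: str,
                  namespace: str, service_account: str) -> str:
    """Return the IAM principal identifier for one Kubernetes service account."""
    return (
        f"principal://iam.googleapis.com/projects/{project_number}"
        f"/locations/global/workloadIdentityPools/{workload_pool}"
        f"/subject/ns/{namespace}/sa/{service_account}"
    )


# Values from the example in this article (masked project number and pool).
member = ksa_principal(
    "23182XXXXXXX", "test-gke-XXXXXX.svc.id.goog", "demo-ns", "demo-sa"
)
print(member)
```

The printed value can be passed to `--member` in the add-iam-policy-binding commands.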

Workflow Explained¶

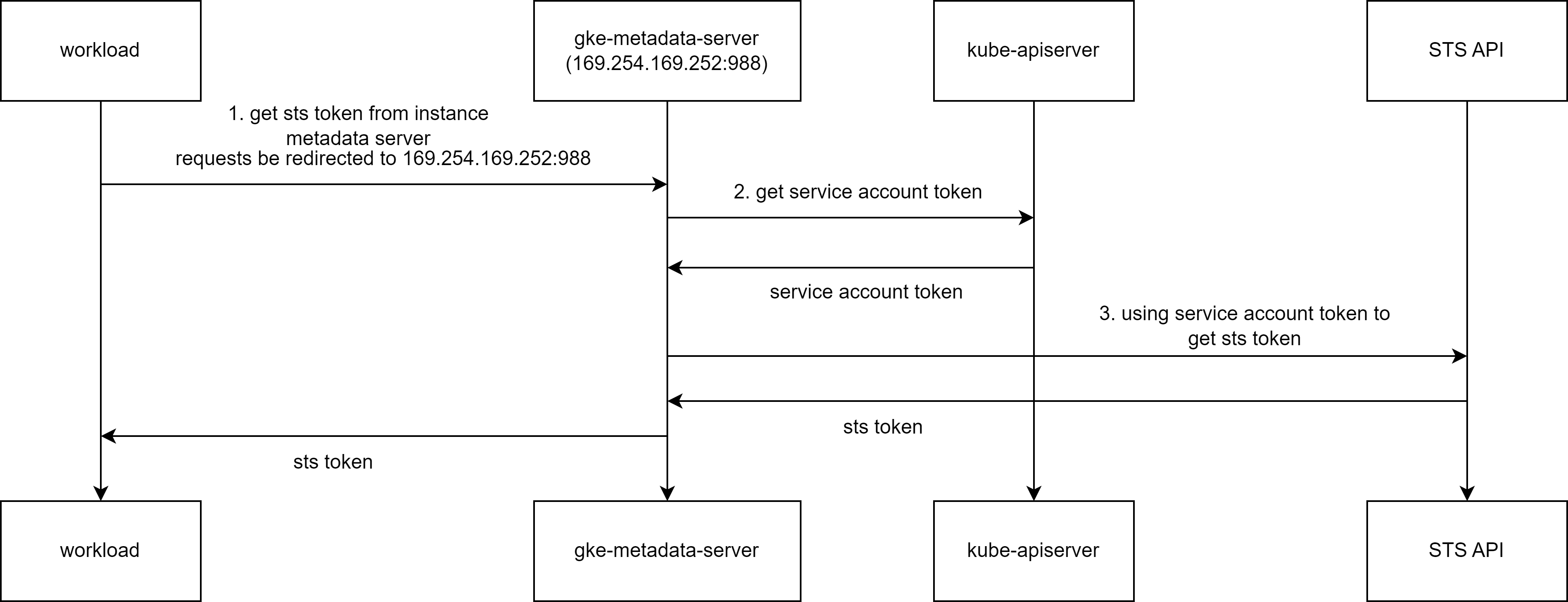

The workflow of the Workload Identity Federation for GKE feature is as follows:

- When a program in the application Pod requests the instance metadata service to get an STS token (it accesses http://metadata.google.internal, which is actually http://169.254.169.254:80), the request is redirected to 169.254.169.252:988. This is the address on which the gke-metadata-server service deployed on the node listens.

- When gke-metadata-server receives the request, it determines which Pod the request came from based on the client IP, then asks the apiserver to generate a token for the service account used by that Pod.

- gke-metadata-server uses the obtained service account token to call GCP's STS API and get an STS token, and finally returns that STS token to the client.
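The three steps above can be sketched as a small simulation. This is not the component's real code: the `Pod` type, the function names, and the two callables standing in for the apiserver and the STS API are all hypothetical and exist only to make the control flow concrete.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Pod:
    ip: str
    namespace: str
    service_account: str


def find_pod_by_ip(pods: list[Pod], client_ip: str) -> Pod:
    """Identify the calling pod from the source IP of the request."""
    for pod in pods:
        if pod.ip == client_ip:
            return pod
    raise LookupError(f"no pod on this node has IP {client_ip}")


def handle_token_request(
    client_ip: str,
    pods: list[Pod],
    workload_pool: str,
    request_ksa_token: Callable[[str, str, str], str],
    exchange_for_sts_token: Callable[[str], str],
) -> str:
    """Resolve the pod, get a service account token, exchange it for an STS token."""
    pod = find_pod_by_ip(pods, client_ip)
    # Ask the (stand-in) apiserver for a token of the pod's service account.
    ksa_token = request_ksa_token(pod.namespace, pod.service_account, workload_pool)
    # Exchange it at the (stand-in) STS API and hand the result to the client.
    return exchange_for_sts_token(ksa_token)


# Stand-ins that only format strings, so the flow can be followed by eye.
pods = [Pod("10.0.0.5", "demo-ns", "demo-sa")]
fake_apiserver = lambda ns, sa, aud: f"jwt({ns}/{sa},aud={aud})"
fake_sts = lambda jwt: f"sts[{jwt}]"
result = handle_token_request(
    "10.0.0.5", pods, "test-gke-XXXXXX.svc.id.goog", fake_apiserver, fake_sts
)
print(result)
```

Because the lookup key is the client IP, pods that share the node's IP cannot be told apart in this model, which is presumably related to the lack of hostNetwork support noted in the comparison table later in the article.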

There are several key components and information that need special attention in this workflow, which will be explained one by one below.

gke-metadata-server¶

When enabling the Workload Identity Federation for GKE feature at the cluster or node pool level, a component named gke-metadata-server will be automatically deployed in the cluster. The workload YAML for this component is as follows:

apiVersion: apps/v1
kind: DaemonSet
metadata:
  annotations:
    deprecated.daemonset.template.generation: "1"
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    k8s-app: gke-metadata-server
  name: gke-metadata-server
  namespace: kube-system
spec:
  minReadySeconds: 90
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: gke-metadata-server
  template:
    metadata:
      annotations:
        components.gke.io/component-name: gke-metadata-server
        components.gke.io/component-version: 0.4.301
        monitoring.gke.io/path: /metricz
      creationTimestamp: null
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
        k8s-app: gke-metadata-server
    spec:
      containers:
      - command:
        - /gke-metadata-server
        - --logtostderr
        - --token-exchange-endpoint=https://securetoken.googleapis.com/v1/identitybindingtoken
        - --workload-pool=test-gke-XXXXXX.svc.id.goog
        - --alts-service-suffixes-using-node-identity=storage.googleapis.com,bigtable.googleapis.com,bigtable2.googleapis.com,bigtablerls.googleapis.com,spanner.googleapis.com,spanner2.googleapis.com,spanner-rls.googleapis.com,grpclb.directpath.google.internal,grpclb-dualstack.directpath.google.internal,staging-wrenchworks.sandbox.googleapis.com,preprod-spanner.sandbox.googleapis.com,wrenchworks-loadtest.googleapis.com,wrenchworks-nonprod.googleapis.com
        - --identity-provider=https://container.googleapis.com/v1/projects/test-gke-XXXXXX/locations/us-central1/clusters/test
        - --passthrough-ksa-list=anthos-identity-service:gke-oidc-envoy-sa,anthos-identity-service:gke-oidc-service-sa,gke-managed-dpv2-observability:hubble-relay,kube-system:antrea-controller,kube-system:container-watcher-pod-reader,kube-system:coredns,kube-system:egress-nat-controller,kube-system:event-exporter-sa,kube-system:fluentd-gcp-scaler,kube-system:gke-metrics-agent,kube-system:gke-spiffe-node-agent,kube-system:heapster,kube-system:konnectivity-agent,kube-system:kube-dns,kube-system:maintenance-handler,kube-system:metadata-agent,kube-system:network-metering-agent,kube-system:node-local-dns,kube-system:pkgextract-service,kube-system:pkgextract-cleanup-service,kube-system:securityprofile-controller,istio-system:istio-ingressgateway-service-account,istio-system:cluster-local-gateway-service-account,csm:csm-sync-agent,knative-serving:controller,kube-system:pdcsi-node-sa,kube-system:gcsfusecsi-node-sa,gmp-system:collector,gke-gmp-system:collector,gke-managed-cim:kube-state-metrics
        - --attributes=cluster-name=test,cluster-uid=392f63049deXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX,cluster-location=us-central1
        - --cluster-uid=392f63049ded410XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX
        - --sts-endpoint=https://sts.googleapis.com
        - --token-exchange-mode=sts
        - --cloud-monitoring-endpoint=monitoring.googleapis.com:443
        - --iam-cred-service-endpoint=https://iamcredentials.googleapis.com
        - --cluster-project-number=2318XXXXXXXX
        - --cluster-location=us-central1
        - --cluster-name=test
        - --component-version=0.4.301
        - --ksa-cache-mode=watchchecker
        - --kcp-allow-watch-checker=true
        - --enable-mds-csi-driver=true
        - --csi-socket=/csi/csi.sock
        - --volumes-db=/var/run/gkemds.gke.io/csi/volumes.boltdb
        env:
        - name: GOMEMLIMIT
          value: "94371840"
        image: us-central1-artifactregistry.gcr.io/gke-release/gke-release/gke-metadata-server:gke_metadata_server_20240702.00_p0@sha256:aea9cc887c91b9a05e5bb4bb604180772594a01f0828bbfacf30c77562ac7205
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            host: 127.0.0.1
            path: /healthz
            port: 989
            scheme: HTTP
          initialDelaySeconds: 30
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 3
        name: gke-metadata-server
        ports:
        - containerPort: 987
          name: alts
          protocol: TCP
        - containerPort: 988
          name: metadata-server
          protocol: TCP
        - containerPort: 989
          name: debug-port
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            host: 127.0.0.1
            path: /healthz
            port: 989
            scheme: HTTP
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        resources:
          limits:
            memory: 100Mi
          requests:
            cpu: 100m
            memory: 100Mi
        securityContext:
          privileged: true
          readOnlyRootFilesystem: true
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /var/lib/kubelet/kubeconfig
          name: kubelet-credentials
          readOnly: true
        - mountPath: /var/lib/kubelet/pki/
          name: kubelet-certs
          readOnly: true
        - mountPath: /var/run/
          name: container-runtime-interface
        - mountPath: /etc/srv/kubernetes/pki
          name: kubelet-pki
          readOnly: true
        - mountPath: /etc/ssl/certs/
          name: ca-certificates
          readOnly: true
        - mountPath: /home/kubernetes/bin/gke-exec-auth-plugin
          name: gke-exec-auth-plugin
          readOnly: true
        - mountPath: /sys/firmware/efi/efivars/
          name: efivars
          readOnly: true
        - mountPath: /dev/tpm0
          name: vtpm
          readOnly: true
        - mountPath: /csi
          name: csi-socket-dir
        - mountPath: /var/run/gkemds.gke.io/csi
          name: state-dir
        - mountPath: /var/lib/kubelet/pods
          mountPropagation: Bidirectional
          name: pods-dir
        - mountPath: /registration
          name: kubelet-registration-dir
      dnsPolicy: Default
      hostNetwork: true
      nodeSelector:
        iam.gke.io/gke-metadata-server-enabled: "true"
        kubernetes.io/os: linux
      priorityClassName: system-node-critical
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      serviceAccount: gke-metadata-server
      serviceAccountName: gke-metadata-server
      terminationGracePeriodSeconds: 30
      tolerations:
      - effect: NoExecute
        operator: Exists
      - effect: NoSchedule
        operator: Exists
      - key: components.gke.io/gke-managed-components
        operator: Exists
      volumes:
      - hostPath:
          path: /var/lib/kubelet/pki/
          type: Directory
        name: kubelet-certs
      - hostPath:
          path: /var/lib/kubelet/kubeconfig
          type: File
        name: kubelet-credentials
      - hostPath:
          path: /var/run/
          type: Directory
        name: container-runtime-interface
      - hostPath:
          path: /etc/srv/kubernetes/pki/
          type: Directory
        name: kubelet-pki
      - hostPath:
          path: /etc/ssl/certs/
          type: Directory
        name: ca-certificates
      - hostPath:
          path: /home/kubernetes/bin/gke-exec-auth-plugin
          type: File
        name: gke-exec-auth-plugin
      - hostPath:
          path: /sys/firmware/efi/efivars/
          type: Directory
        name: efivars
      - hostPath:
          path: /dev/tpm0
          type: CharDevice
        name: vtpm
      - hostPath:
          path: /var/lib/kubelet/plugins/gkemds.gke.io
          type: DirectoryOrCreate
        name: csi-socket-dir
      - hostPath:
          path: /var/lib/kubelet/pods
          type: Directory
        name: pods-dir
      - hostPath:
          path: /var/lib/kubelet/plugins_registry
          type: Directory
        name: kubelet-registration-dir
      - hostPath:
          path: /var/lib/kubelet/plugins
          type: Directory
        name: kubelet-plugins-dir
      - hostPath:
          path: /var/run/gkemds.gke.io/csi
          type: DirectoryOrCreate
        name: state-dir

The gke-metadata-server component has the following characteristics:

- The component's workload is a DaemonSet.

- The component Pod uses hostNetwork: true and privileged: true.

- The service inside the component listens on ports 987, 988, and 989. Port 988 receives the redirected requests for the instance metadata service:

$ ss -atnlp |grep gke
LISTEN 0 1024 *:987 *:* users:(("gke-metadata-se",pid=183706,fd=10))
LISTEN 0 1024 *:989 *:* users:(("gke-metadata-se",pid=183706,fd=12))
LISTEN 0 1024 *:988 *:* users:(("gke-metadata-se",pid=183706,fd=15))

- The previously mentioned IP 169.254.169.252 is an address assigned to the node's loopback interface (lo). Because gke-metadata-server listens on port 988 on all addresses, this includes the previously mentioned 169.254.169.252:988:

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet 169.254.169.252/32 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever

- All nodes with the Workload Identity Federation for GKE feature enabled are configured with the following nftables rules during initialization. The rules ensure that traffic from business containers to the metadata service (http://metadata.google.internal, 169.254.169.254:80) is redirected to 169.254.169.252:988, where the component listens:

table ip nat {
    chain PREROUTING {
        type nat hook prerouting priority dstnat; policy accept;
        counter packets 2143 bytes 169432 jump KUBE-SERVICES
        iifname != "eth0" meta l4proto tcp ip daddr 169.254.169.254 tcp dport 8080 counter packets 0 bytes 0 dnat to 169.254.169.252:987
        iifname != "eth0" meta l4proto tcp ip daddr 169.254.169.254 tcp dport 80 counter packets 181 bytes 10860 dnat to 169.254.169.252:988
    }
}

- As mentioned earlier, the component determines the source pod from the client IP and asks the apiserver for that pod's service account. This raises the question of which credentials the component uses to access the apiserver. The component uses the kubelet's credentials on the node (the component YAML shown earlier mounts the kubelet kubeconfig). In addition, when the component is deployed, extra RBAC permissions for get/list/watch on pods and serviceaccounts are granted to the kubelet's credentials, so that the component can read information about pods and service accounts on the current node:

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    components.gke.io/component-name: gke-metadata-server
    components.gke.io/component-version: 0.4.301
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
  name: gce:gke-metadata-server-reader
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: gce:gke-metadata-server-reader
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:nodes
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    components.gke.io/component-name: gke-metadata-server
    components.gke.io/component-version: 0.4.301
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
  name: gce:gke-metadata-server-reader
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - serviceaccounts
  verbs:
  - get
  - watch
  - list

- If the service account used by the pod is not bound to any IAM role, the STS token obtained by the application in the pod when accessing the metadata service will be the STS token of the node's default Google service account.
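To make the effect of the two nftables DNAT rules in this section easier to follow, here is a minimal model of their matching logic. It is a sketch only: the `Packet` type and `prerouting_dnat` function are hypothetical names, the interface name `veth0` in the usage is an assumed example of a pod-side interface, and the KUBE-SERVICES jump in the same chain is ignored.

```python
from typing import NamedTuple


class Packet(NamedTuple):
    iifname: str  # interface the packet arrived on
    proto: str    # layer-4 protocol, e.g. "tcp"
    daddr: str    # destination IP
    dport: int    # destination port


METADATA_IP = "169.254.169.254"
# Destination port -> DNAT target, mirroring the two rules in the PREROUTING chain.
DNAT_TARGETS = {8080: ("169.254.169.252", 987), 80: ("169.254.169.252", 988)}


def prerouting_dnat(pkt: Packet) -> tuple[str, int]:
    """Return the (address, port) the packet is delivered to after the rules."""
    if (
        pkt.iifname != "eth0"
        and pkt.proto == "tcp"
        and pkt.daddr == METADATA_IP
        and pkt.dport in DNAT_TARGETS
    ):
        return DNAT_TARGETS[pkt.dport]
    return pkt.daddr, pkt.dport  # no rule matched: destination unchanged


# A request from a pod to the metadata service is rewritten to port 988.
print(prerouting_dnat(Packet("veth0", "tcp", METADATA_IP, 80)))
# Traffic arriving on eth0 is left untouched.
print(prerouting_dnat(Packet("eth0", "tcp", METADATA_IP, 80)))
```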

service account token¶

For requests initiated by programs within each pod, the gke-metadata-server component doesn't directly use the service account token mounted in the pod container, but instead requests the apiserver to generate a new service account token.
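The article does not show how the component asks the apiserver for this token. Kubernetes provides the TokenRequest API for issuing service account tokens with a chosen audience, so, assuming the component relies on that API, the request could look roughly like the sketch below. The helper names and the default expiration are illustrative assumptions; the real component may set additional fields.

```python
import json


def token_request_path(namespace: str, service_account: str) -> str:
    """API path of the TokenRequest subresource for a service account."""
    return f"/api/v1/namespaces/{namespace}/serviceaccounts/{service_account}/token"


def token_request_body(audience: str, expiration_seconds: int = 3600) -> dict:
    """Body of a TokenRequest asking for a token with the given audience."""
    return {
        "apiVersion": "authentication.k8s.io/v1",
        "kind": "TokenRequest",
        "spec": {
            "audiences": [audience],
            "expirationSeconds": expiration_seconds,  # assumed value
        },
    }


path = token_request_path("demo-ns", "demo-sa")
body = token_request_body("test-gke-XXXXXX.svc.id.goog")
print("POST", path)
print(json.dumps(body, indent=2))
```

The audience passed here is the workload pool, which is what ends up in the aud field of the resulting token.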

Here's an example of the payload for a service account token mounted in the container:

{
  "aud": [
    "https://container.googleapis.com/v1/projects/test-gke-XXXXXX/locations/us-central1/clusters/test"
  ],
  "exp": ...,
  "iat": ...,
  "iss": "https://container.googleapis.com/v1/projects/test-gke-XXXXXX/locations/us-central1/clusters/test",
  "jti": "...",
  "kubernetes.io": {...},
  "nbf": ...,
  "sub": "system:serviceaccount:demo-ns:demo-sa"
}

Here's an example of the payload for a service account token generated by the apiserver when requested by the component:

{
  "aud": [
    "test-gke-XXXXXX.svc.id.goog"
  ],
  "exp": ...,
  "iat": ...,
  "iss": "https://container.googleapis.com/v1/projects/test-gke-XXXXXX/locations/us-central1/clusters/test",
  "jti": "...",
  "kubernetes.io": {...},
  "nbf": ...,
  "sub": "system:serviceaccount:demo-ns:demo-sa"
}

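As we can see, the main difference is the aud field: the token mounted in the container is issued for the cluster's own URL, while the token requested by the component is issued for the workload pool (test-gke-XXXXXX.svc.id.goog).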
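To inspect the aud claim of such tokens yourself, the payload segment of a JWT can be decoded without verifying the signature. The sketch below uses only the standard library; `make_unsigned_jwt` is a hypothetical helper that builds dummy tokens so the example runs without a cluster, and its placeholder signature is not valid.

```python
import base64
import json


def jwt_payload(token: str) -> dict:
    """Decode the payload segment of a JWT without verifying the signature."""
    payload_b64 = token.split(".")[1]
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)  # restore stripped padding
    return json.loads(base64.urlsafe_b64decode(padded))


def _b64url(obj: dict) -> str:
    raw = json.dumps(obj).encode("utf-8")
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def make_unsigned_jwt(payload: dict) -> str:
    """Build a dummy token for demonstration only (placeholder signature)."""
    header = _b64url({"alg": "none"})
    return f"{header}.{_b64url(payload)}.sig"


CLUSTER_URL = (
    "https://container.googleapis.com/v1/projects/test-gke-XXXXXX"
    "/locations/us-central1/clusters/test"
)
SUBJECT = "system:serviceaccount:demo-ns:demo-sa"

mounted = make_unsigned_jwt({"aud": [CLUSTER_URL], "sub": SUBJECT})
requested = make_unsigned_jwt({"aud": ["test-gke-XXXXXX.svc.id.goog"], "sub": SUBJECT})

for name, token in (("mounted", mounted), ("requested", requested)):
    print(name, jwt_payload(token)["aud"])
```

Inside a pod, the same `jwt_payload` function can be applied to the contents of the mounted service account token file.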

sts token¶

The gke-metadata-server component will use the obtained service account token to access the STS token API to get an STS token.

Here's an example of the corresponding request:

:authority: sts.googleapis.com
:method: POST
:path: /v1/token?alt=json&prettyPrint=false
:scheme: https
x-goog-api-client: gl-go/1.23.0--20240626-RC01 cl/646990413 +5a18e79687 X:fieldtrack,boringcrypto gdcl/0.177.0
user-agent: google-api-go-client/0.5 gke-metadata-server
content-type: application/json
content-length: 1673
accept-encoding: gzip

{"audience":"identitynamespace:test-gke-XXXXXX.svc.id.goog:https://container.googleapis.com/v1/projects/test-gke-XXXXXX/locations/us-central1/clusters/test","grantType":"urn:ietf:params:oauth:grant-type:token-exchange","requestedTokenType":"urn:ietf:params:oauth:token-type:access_token","scope":"https://www.googleapis.com/auth/cloud-platform https://www.googleapis.com/auth/userinfo.email","subjectToken":"XXX.XXX.XXX","subjectTokenType":"urn:ietf:params:oauth:token-type:jwt"}

:status: 200
content-type: application/json; charset=UTF-8
vary: Origin
vary: X-Origin
vary: Referer
content-encoding: gzip
date: ...
server: scaffolding on HTTPServer2
content-length: 1061
x-xss-protection: 0
x-frame-options: SAMEORIGIN
x-content-type-options: nosniff

{"access_token":"ya29.d.XXX","issued_token_type":"urn:ietf:params:oauth:token-type:access_token","token_type":"Bearer","expires_in":3599}

The formatted content of the request body is as follows:

{
  "audience": "identitynamespace:test-gke-XXXXXX.svc.id.goog:https://container.googleapis.com/v1/projects/test-gke-XXXXXX/locations/us-central1/clusters/test",
  "grantType": "urn:ietf:params:oauth:grant-type:token-exchange",
  "requestedTokenType": "urn:ietf:params:oauth:token-type:access_token",
  "scope": "https://www.googleapis.com/auth/cloud-platform https://www.googleapis.com/auth/userinfo.email",
  "subjectToken": "XXX.XXX.XXX",
  "subjectTokenType": "urn:ietf:params:oauth:token-type:jwt"
}

The formatted content of the response body is as follows:

{
  "access_token": "ya29.d.XXX",
  "issued_token_type": "urn:ietf:params:oauth:token-type:access_token",
  "token_type": "Bearer",
  "expires_in": 3599
}

Differences from GKE Workload Identity¶

Here are the differences between Workload Identity Federation for GKE and the GKE Workload Identity feature (also known as linking Kubernetes ServiceAccounts to IAM):

| Comparison Item | GKE Workload Identity | Workload Identity Federation for GKE |
|---|---|---|
| Requires Google service account (GSA) | Required | Not Required |
| IAM role binding configuration | Requires GSA role binding | Requires k8s service account (KSA) role binding |
| KSA impersonating GSA configuration | Required | Not Required |
| GSA info configuration for KSA in cluster | Required | Not Required |
| Multiple KSA/GSA binding support | Not supported | Supported (e.g., all SAs in namespace, all SAs in cluster) |
| Authorization at project + namespace + KSA | Supported | Supported |
| Authorization at cluster + namespace + KSA | Not supported | Not supported |
| Support for hostNetwork applications | Not supported | Not supported |
| Depends on gke-metadata-server component | Required | Required |
| Cloud API support for obtained STS token | Almost all cloud product APIs | Most cloud APIs supported, some limited support, few unsupported |

Incidentally, although the official tutorials and documentation for Workload Identity Federation for GKE state that it requires the gke-metadata-server component, the workflow discussed earlier shows that the component is not strictly necessary. We can remove the dependency by mounting a service account token with the required audience (the workload pool) into the application Pod and having the application call the STS token API directly.
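A minimal sketch of that direct approach follows, using only the Python standard library. The endpoint and request fields are copied from the request captured in the STS token section; the token file path is hypothetical and assumes a projected service account token volume whose audience is the workload pool. The function names are illustrative, and whether the STS API accepts such a call in your environment should be verified rather than taken from this sketch.

```python
import json
import urllib.request
from pathlib import Path

# Host and path taken from the captured request (query parameters omitted).
STS_TOKEN_URL = "https://sts.googleapis.com/v1/token"

# Hypothetical mount path of a projected service account token; adjust to your pod spec.
TOKEN_FILE = "/var/run/secrets/tokens/gcp-ksa/token"


def sts_audience(workload_pool: str, identity_provider: str) -> str:
    """Audience string in the form seen in the captured request."""
    return f"identitynamespace:{workload_pool}:{identity_provider}"


def build_sts_request(subject_token: str, audience: str) -> urllib.request.Request:
    """Build the token-exchange POST request without sending it."""
    body = {
        "audience": audience,
        "grantType": "urn:ietf:params:oauth:grant-type:token-exchange",
        "requestedTokenType": "urn:ietf:params:oauth:token-type:access_token",
        "scope": "https://www.googleapis.com/auth/cloud-platform",
        "subjectToken": subject_token,
        "subjectTokenType": "urn:ietf:params:oauth:token-type:jwt",
    }
    return urllib.request.Request(
        STS_TOKEN_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def exchange_token(audience: str, token_file: str = TOKEN_FILE,
                   timeout: float = 10.0) -> dict:
    """Read the mounted token and exchange it; needs network access to STS."""
    subject_token = Path(token_file).read_text().strip()
    request = build_sts_request(subject_token, audience)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read())
```

A successful response would carry the same fields as the response body shown in the STS token section (access_token, token_type, expires_in).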

References¶

- Make IAM for GKE easier to use with Workload Identity Federation | Google Cloud Blog

- Authenticate to Google Cloud APIs from GKE workloads | Google Kubernetes Engine (GKE)

- About Workload Identity Federation for GKE | Google Kubernetes Engine (GKE) | Google Cloud

- Identity federation: products and limitations | IAM Documentation | Google Cloud

- Method: token | IAM Documentation | Google Cloud

- About VM metadata | Compute Engine Documentation | Google Cloud
