This post takes a brief look at Workload Identity Federation for GKE, a feature that GKE officially announced a while ago.

Overview¶

Workload Identity Federation for GKE is an improved version of the original GKE Workload Identity feature. The core improvement is that it requires less configuration, which makes it easier to use.

Usage¶

You can try the feature with the following steps:

1. Create a GKE cluster with Workload Identity Federation for GKE enabled. The option is located at: Create cluster - Security - Enable Workload Identity.

2. Bind IAM roles to the service account used by the test application. A single service account can be bound to multiple roles. For example:

       $ kubectl create ns demo-ns
       $ kubectl -n demo-ns create serviceaccount demo-sa
       $ gcloud projects add-iam-policy-binding projects/test-gke-XXX \
           --role=roles/container.clusterViewer \
           --member=principal://iam.googleapis.com/projects/23182XXXXXXX/locations/global/workloadIdentityPools/test-gke-XXXXXX.svc.id.goog/subject/ns/<namespace-name>/sa/<service-account-name> \
           --condition=None
       $ gcloud storage buckets add-iam-policy-binding gs://<bucket-name> \
           --role=roles/storage.objectViewer \
           --member=principal://iam.googleapis.com/projects/23182XXXXXXX/locations/global/workloadIdentityPools/test-gke-XXXXXX.svc.id.goog/subject/ns/<namespace-name>/sa/<service-account-name> \
           --condition=None

3. Deploy the test application. Its Pod must use the namespace and service account from step 2, and needs a nodeSelector so that it is scheduled onto a node with Workload Identity Federation for GKE enabled:

       apiVersion: v1
       kind: Pod
       metadata:
         name: test-pod
         namespace: demo-ns
       spec:
         nodeSelector:
           iam.gke.io/gke-metadata-server-enabled: "true"
         serviceAccountName: demo-sa
         containers:
         - name: test-pod
           image: google/cloud-sdk:slim
           command: ["sleep","infinity"]

4. Once the Pod is running, open a shell in the test-pod container and request an STS token from the instance metadata service. The official SDKs used by real applications obtain the STS token for calling Google Cloud APIs from the instance metadata service in a similar way.

       $ gcloud auth print-access-token
       ya29.d.XXX
       $ curl -H "Metadata-Flavor: Google" http://metadata.google.internal/computeMetadata/v1/instance/service-accounts/default/token
       {"access_token":"ya29.d.XXX","expires_in":3423,"token_type":"Bearer"}

   The STS token obtained this way carries the permissions of all the roles bound in step 2:

       $ gcloud container node-pools list --zone us-central1 --cluster test
       NAME          MACHINE_TYPE  DISK_SIZE_GB  NODE_VERSION
       default-pool  e2-medium     100           1.30.6-gke.1125000
       $ curl -X GET -H "Authorization: Bearer $(gcloud auth print-access-token)" \
           "https://storage.googleapis.com/storage/v1/b/demo-gke-workload-identity-federation/o"
       {
         "kind": "storage#objects"
       }
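The `--member` value passed to `gcloud` in step 2 follows a fixed pattern. As a minimal sketch, the helper below assembles it from its parts. The function name `ksa_principal` and its parameter names are made up for this illustration; the format itself is simply the one visible in the commands of step 2.

```python
def ksa_principal(project_number: str, project_id: str, namespace: str, ksa: str) -> str:
    """Build the IAM principal identifier for one Kubernetes service account.

    Hypothetical helper: it only mirrors the string format used in the
    gcloud commands of step 2.
    """
    # The workload identity pool name is derived from the project ID.
    pool = f"{project_id}.svc.id.goog"
    return (
        "principal://iam.googleapis.com/"
        f"projects/{project_number}/locations/global/"
        f"workloadIdentityPools/{pool}/subject/ns/{namespace}/sa/{ksa}"
    )
```

The result can be passed as the value of `--member` when binding a role to the service account created in step 2.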

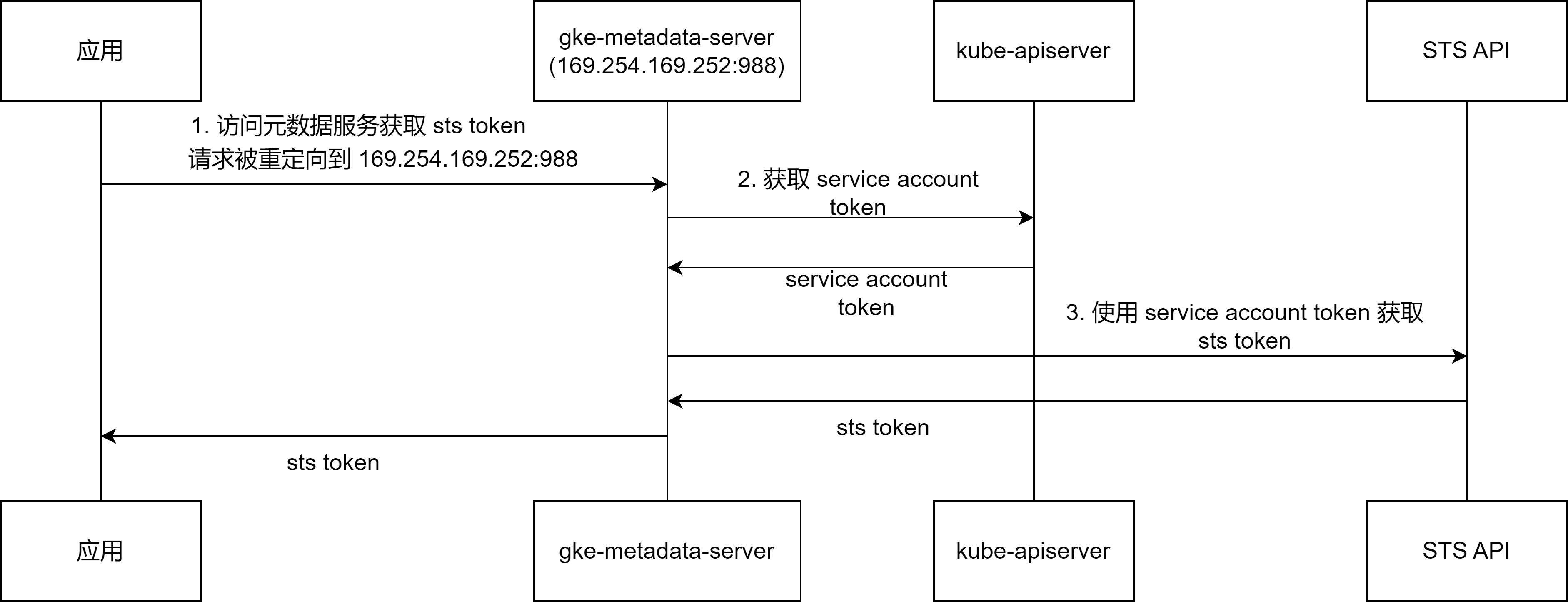
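The `curl` call against the metadata service in step 4 can also be made from application code. The following is only a sketch using the Python standard library, not how the official SDKs are implemented; the function names are chosen for this example, while the URL and the `Metadata-Flavor` header are taken from the command in step 4.

```python
import json
import urllib.request
from typing import Tuple

# Same endpoint as the curl command in step 4.
METADATA_TOKEN_URL = (
    "http://metadata.google.internal/computeMetadata/v1/"
    "instance/service-accounts/default/token"
)


def build_token_request(url: str = METADATA_TOKEN_URL) -> urllib.request.Request:
    """Build the GET request, including the Metadata-Flavor header."""
    return urllib.request.Request(url, headers={"Metadata-Flavor": "Google"})


def parse_token_response(body: bytes) -> Tuple[str, int]:
    """Extract (access_token, expires_in) from the JSON response body."""
    data = json.loads(body)
    return data["access_token"], int(data["expires_in"])


def fetch_access_token(timeout: float = 5.0) -> Tuple[str, int]:
    """Fetch a token; only works from inside a Pod or VM that can reach the metadata service."""
    with urllib.request.urlopen(build_token_request(), timeout=timeout) as resp:
        return parse_token_response(resp.read())
```

Calling `fetch_access_token()` inside test-pod should return the same kind of `ya29.d.XXX` token as the `curl` command, together with its remaining lifetime in seconds.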

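How it works¶

Workload Identity Federation for GKE works as follows:

- When a program inside an application Pod's container requests an STS token from the instance metadata service (it accesses http://metadata.google.internal , which is actually http://169.254.169.254:80 ), the request is redirected to 169.254.169.252:988. This is the address and port that the gke-metadata-server service deployed on the node listens on.

- On receiving the request, gke-metadata-server identifies the source Pod from the client IP of the request, then asks the apiserver to issue a token for the service account used by that Pod.

- gke-metadata-server then uses that service account token to call the Google Cloud STS API and obtain an STS token, and finally returns the STS token to the client.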
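The second step of the flow above, mapping a client IP to a Pod and its service account, can be pictured with a small lookup. This is purely illustrative: the source does not show how gke-metadata-server implements it, and `PodInfo` and `find_pod_by_client_ip` are hypothetical names. The sketch also assumes that hostNetwork Pods are skipped because they share the node's IP and therefore cannot be told apart by client IP.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class PodInfo:
    """Hypothetical, minimal view of a Pod running on the node."""
    namespace: str
    name: str
    pod_ip: str
    service_account: str
    host_network: bool = False


def find_pod_by_client_ip(pods: Iterable[PodInfo], client_ip: str) -> Optional[PodInfo]:
    """Return the Pod whose IP matches the request's client IP, if any."""
    for pod in pods:
        # Assumption: hostNetwork Pods use the node IP, so they are not matched.
        if not pod.host_network and pod.pod_ip == client_ip:
            return pod
    return None
```

With the matching Pod in hand, the namespace and service account name are what the component would need in order to ask the apiserver for a token.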

Several key components and pieces of information in this flow deserve a closer look. The sections below cover them one by one.

gke-metadata-server¶

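When Workload Identity Federation for GKE is enabled at the cluster level or the node pool level, a component named gke-metadata-server is automatically deployed in the cluster. The YAML of its workload is as follows: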

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      annotations:
        deprecated.daemonset.template.generation: "1"
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
        k8s-app: gke-metadata-server
      name: gke-metadata-server
      namespace: kube-system
    spec:
      minReadySeconds: 90
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          k8s-app: gke-metadata-server
      template:
        metadata:
          annotations:
            components.gke.io/component-name: gke-metadata-server
            components.gke.io/component-version: 0.4.301
            monitoring.gke.io/path: /metricz
          creationTimestamp: null
          labels:
            addonmanager.kubernetes.io/mode: Reconcile
            k8s-app: gke-metadata-server
        spec:
          containers:
          - command:
            - /gke-metadata-server
            - --logtostderr
            - --token-exchange-endpoint=https://securetoken.googleapis.com/v1/identitybindingtoken
            - --workload-pool=test-gke-XXXXXX.svc.id.goog
            - --alts-service-suffixes-using-node-identity=storage.googleapis.com,bigtable.googleapis.com,bigtable2.googleapis.com,bigtablerls.googleapis.com,spanner.googleapis.com,spanner2.googleapis.com,spanner-rls.googleapis.com,grpclb.directpath.google.internal,grpclb-dualstack.directpath.google.internal,staging-wrenchworks.sandbox.googleapis.com,preprod-spanner.sandbox.googleapis.com,wrenchworks-loadtest.googleapis.com,wrenchworks-nonprod.googleapis.com
            - --identity-provider=https://container.googleapis.com/v1/projects/test-gke-XXXXXX/locations/us-central1/clusters/test
            - --passthrough-ksa-list=anthos-identity-service:gke-oidc-envoy-sa,anthos-identity-service:gke-oidc-service-sa,gke-managed-dpv2-observability:hubble-relay,kube-system:antrea-controller,kube-system:container-watcher-pod-reader,kube-system:coredns,kube-system:egress-nat-controller,kube-system:event-exporter-sa,kube-system:fluentd-gcp-scaler,kube-system:gke-metrics-agent,kube-system:gke-spiffe-node-agent,kube-system:heapster,kube-system:konnectivity-agent,kube-system:kube-dns,kube-system:maintenance-handler,kube-system:metadata-agent,kube-system:network-metering-agent,kube-system:node-local-dns,kube-system:pkgextract-service,kube-system:pkgextract-cleanup-service,kube-system:securityprofile-controller,istio-system:istio-ingressgateway-service-account,istio-system:cluster-local-gateway-service-account,csm:csm-sync-agent,knative-serving:controller,kube-system:pdcsi-node-sa,kube-system:gcsfusecsi-node-sa,gmp-system:collector,gke-gmp-system:collector,gke-managed-cim:kube-state-metrics
            - --attributes=cluster-name=test,cluster-uid=392f63049deXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX,cluster-location=us-central1
            - --cluster-uid=392f63049ded410XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX
            - --sts-endpoint=https://sts.googleapis.com
            - --token-exchange-mode=sts
            - --cloud-monitoring-endpoint=monitoring.googleapis.com:443
            - --iam-cred-service-endpoint=https://iamcredentials.googleapis.com
            - --cluster-project-number=2318XXXXXXXX
            - --cluster-location=us-central1
            - --cluster-name=test
            - --component-version=0.4.301
            - --ksa-cache-mode=watchchecker
            - --kcp-allow-watch-checker=true
            - --enable-mds-csi-driver=true
            - --csi-socket=/csi/csi.sock
            - --volumes-db=/var/run/gkemds.gke.io/csi/volumes.boltdb
            env:
            - name: GOMEMLIMIT
              value: "94371840"
            image: us-central1-artifactregistry.gcr.io/gke-release/gke-release/gke-metadata-server:gke_metadata_server_20240702.00_p0@sha256:aea9cc887c91b9a05e5bb4bb604180772594a01f0828bbfacf30c77562ac7205
            imagePullPolicy: IfNotPresent
            livenessProbe:
              failureThreshold: 3
              httpGet:
                host: 127.0.0.1
                path: /healthz
                port: 989
                scheme: HTTP
              initialDelaySeconds: 30
              periodSeconds: 10
              successThreshold: 1
              timeoutSeconds: 3
            name: gke-metadata-server
            ports:
            - containerPort: 987
              name: alts
              protocol: TCP
            - containerPort: 988
              name: metadata-server
              protocol: TCP
            - containerPort: 989
              name: debug-port
              protocol: TCP
            readinessProbe:
              failureThreshold: 3
              httpGet:
                host: 127.0.0.1
                path: /healthz
                port: 989
                scheme: HTTP
              periodSeconds: 10
              successThreshold: 1
              timeoutSeconds: 1
            resources:
              limits:
                memory: 100Mi
              requests:
                cpu: 100m
                memory: 100Mi
            securityContext:
              privileged: true
              readOnlyRootFilesystem: true
            terminationMessagePath: /dev/termination-log
            terminationMessagePolicy: File
            volumeMounts:
            - mountPath: /var/lib/kubelet/kubeconfig
              name: kubelet-credentials
              readOnly: true
            - mountPath: /var/lib/kubelet/pki/
              name: kubelet-certs
              readOnly: true
            - mountPath: /var/run/
              name: container-runtime-interface
            - mountPath: /etc/srv/kubernetes/pki
              name: kubelet-pki
              readOnly: true
            - mountPath: /etc/ssl/certs/
              name: ca-certificates
              readOnly: true
            - mountPath: /home/kubernetes/bin/gke-exec-auth-plugin
              name: gke-exec-auth-plugin
              readOnly: true
            - mountPath: /sys/firmware/efi/efivars/
              name: efivars
              readOnly: true
            - mountPath: /dev/tpm0
              name: vtpm
              readOnly: true
            - mountPath: /csi
              name: csi-socket-dir
            - mountPath: /var/run/gkemds.gke.io/csi
              name: state-dir
            - mountPath: /var/lib/kubelet/pods
              mountPropagation: Bidirectional
              name: pods-dir
            - mountPath: /registration
              name: kubelet-registration-dir
          dnsPolicy: Default
          hostNetwork: true
          nodeSelector:
            iam.gke.io/gke-metadata-server-enabled: "true"
            kubernetes.io/os: linux
          priorityClassName: system-node-critical
          restartPolicy: Always
          schedulerName: default-scheduler
          securityContext: {}
          serviceAccount: gke-metadata-server
          serviceAccountName: gke-metadata-server
          terminationGracePeriodSeconds: 30
          tolerations:
          - effect: NoExecute
            operator: Exists
          - effect: NoSchedule
            operator: Exists
          - key: components.gke.io/gke-managed-components
            operator: Exists
          volumes:
          - hostPath:
              path: /var/lib/kubelet/pki/
              type: Directory
            name: kubelet-certs
          - hostPath:
              path: /var/lib/kubelet/kubeconfig
              type: File
            name: kubelet-credentials
          - hostPath:
              path: /var/run/
              type: Directory
            name: container-runtime-interface
          - hostPath:
              path: /etc/srv/kubernetes/pki/
              type: Directory
            name: kubelet-pki
          - hostPath:
              path: /etc/ssl/certs/
              type: Directory
            name: ca-certificates
          - hostPath:
              path: /home/kubernetes/bin/gke-exec-auth-plugin
              type: File
            name: gke-exec-auth-plugin
          - hostPath:
              path: /sys/firmware/efi/efivars/
              type: Directory
            name: efivars
          - hostPath:
              path: /dev/tpm0
              type: CharDevice
            name: vtpm
          - hostPath:
              path: /var/lib/kubelet/plugins/gkemds.gke.io
              type: DirectoryOrCreate
            name: csi-socket-dir
          - hostPath:
              path: /var/lib/kubelet/pods
              type: Directory
            name: pods-dir
          - hostPath:
              path: /var/lib/kubelet/plugins_registry
              type: Directory
            name: kubelet-registration-dir
          - hostPath:
              path: /var/lib/kubelet/plugins
              type: Directory
            name: kubelet-plugins-dir
          - hostPath:
              path: /var/run/gkemds.gke.io/csi
              type: DirectoryOrCreate
            name: state-dir

The gke-metadata-server component has the following characteristics:

The component's workload is a DaemonSet.

The component's Pod uses hostNetwork: true and privileged: true.

The service inside the component listens on ports 987, 988 and 989. Port 988 receives the redirected requests for the instance metadata service:

    $ ss -atnlp |grep gke
    LISTEN 0 1024 *:987 *:* users:(("gke-metadata-se",pid=183706,fd=10))
    LISTEN 0 1024 *:989 *:* users:(("gke-metadata-se",pid=183706,fd=12))
    LISTEN 0 1024 *:988 *:* users:(("gke-metadata-se",pid=183706,fd=15))

The IP 169.254.169.252 mentioned earlier is an address on the node's lo interface. Because gke-metadata-server listens on port 988 on all addresses, that covers the 169.254.169.252:988 endpoint mentioned above:

    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet 169.254.169.252/32 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever

Every node with Workload Identity Federation for GKE enabled is configured with the following nftables rules during initialization. They ensure that requests to the metadata service ( http://metadata.google.internal , 169.254.169.254:80) sent from application containers are redirected to 169.254.169.252:988, where the component listens:

    table ip nat {
      chain PREROUTING {
        type nat hook prerouting priority dstnat; policy accept;
        counter packets 2143 bytes 169432 jump KUBE-SERVICES
        iifname != "eth0" meta l4proto tcp ip daddr 169.254.169.254 tcp dport 8080 counter packets 0 bytes 0 dnat to 169.254.169.252:987
        iifname != "eth0" meta l4proto tcp ip daddr 169.254.169.254 tcp dport 80 counter packets 181 bytes 10860 dnat to 169.254.169.252:988
      }
    }

As described earlier, the component determines the source Pod from the client IP and asks the apiserver for a service account token, which raises the question of which credentials it uses to access the apiserver. It uses the kubelet's credentials on the node (the component YAML above mounts the kubelet kubeconfig). In addition, when the component is deployed, the kubelet's credentials are granted get/list/watch RBAC permissions on pods and serviceaccounts, which are used to look up the Pods on the current node and their service accounts:

    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      annotations:
        components.gke.io/component-name: gke-metadata-server
        components.gke.io/component-version: 0.4.301
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
      name: gce:gke-metadata-server-reader
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: gce:gke-metadata-server-reader
    subjects:
    - apiGroup: rbac.authorization.k8s.io
      kind: Group
      name: system:nodes
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      annotations:
        components.gke.io/component-name: gke-metadata-server
        components.gke.io/component-version: 0.4.301
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
      name: gce:gke-metadata-server-reader
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      - serviceaccounts
      verbs:
      - get
      - watch
      - list

If the service account used by a Pod has no IAM role bound to it, the STS token that applications in the Pod obtain from the metadata service will be the STS token of the node's default Google service account.
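The two nftables DNAT rules shown in this section can be read as a small decision function. The sketch below restates them in Python purely to make the matching conditions explicit; it is not part of GKE, and the function name `dnat_target` and the interface name used in the usage note are invented for the example.

```python
from typing import Optional, Tuple

METADATA_IP = "169.254.169.254"   # address that Pods send metadata requests to
REDIRECT_IP = "169.254.169.252"   # address on lo where gke-metadata-server is reachable
PORT_MAP = {8080: 987, 80: 988}   # original destination port -> redirected port


def dnat_target(iifname: str, proto: str, daddr: str, dport: int) -> Optional[Tuple[str, int]]:
    """Return the rewritten (ip, port) if a DNAT rule matches, else None."""
    # Both rules match only TCP packets to the metadata IP that did NOT arrive on eth0.
    if iifname == "eth0" or proto != "tcp" or daddr != METADATA_IP:
        return None
    new_port = PORT_MAP.get(dport)
    if new_port is None:
        return None
    return (REDIRECT_IP, new_port)
```

For instance, a TCP packet to 169.254.169.254:80 arriving on a Pod-side interface (here called `veth123` as a placeholder) maps to 169.254.169.252:988, while the same packet arriving on `eth0` is left untouched.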

service account token¶

For each request from a program inside a Pod, gke-metadata-server does not directly use the service account token mounted into the Pod's container. Instead, it asks the apiserver to issue a new service account token.

An example payload of the service account token mounted into the container:

    {
      "aud": [
        "https://container.googleapis.com/v1/projects/test-gke-XXXXXX/locations/us-central1/clusters/test"
      ],
      "exp": ...,
      "iat": ...,
      "iss": "https://container.googleapis.com/v1/projects/test-gke-XXXXXX/locations/us-central1/clusters/test",
      "jti": "...",
      "kubernetes.io": {...},
      "nbf": ...,
      "sub": "system:serviceaccount:demo-ns:demo-sa"
    }

An example payload of the service account token that the component requests from the apiserver:

    {
      "aud": [
        "test-gke-XXXXXX.svc.id.goog"
      ],
      "exp": ...,
      "iat": ...,
      "iss": "https://container.googleapis.com/v1/projects/test-gke-XXXXXX/locations/us-central1/clusters/test",
      "jti": "...",
      "kubernetes.io": {...},
      "nbf": ...,
      "sub": "system:serviceaccount:demo-ns:demo-sa"
    }

As you can see, the main difference is the value of aud.
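To compare the `aud` claim of two service account tokens yourself, you can decode the JWT payload locally. The helper below is a minimal sketch that only base64url-decodes the middle segment of the token; it deliberately does not verify the signature, so it is suitable for inspection only. The name `decode_jwt_payload` is chosen for this example.

```python
import base64
import json


def decode_jwt_payload(token: str) -> dict:
    """Decode the payload of a JWT WITHOUT verifying its signature."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected a token of the form header.payload.signature")
    payload = parts[1]
    # JWT segments drop base64 padding; restore it before decoding.
    payload += "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(payload))
```

Reading `decode_jwt_payload(token)["aud"]` for the mounted token and for a token issued with the workload pool as audience should show the difference described above.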

sts token¶

The gke-metadata-server component uses the service account token it obtained to call the STS token API and get an STS token.

An example of the request and response:

    :authority: sts.googleapis.com
    :method: POST
    :path: /v1/token?alt=json&prettyPrint=false
    :scheme: https
    x-goog-api-client: gl-go/1.23.0--20240626-RC01 cl/646990413 +5a18e79687 X:fieldtrack,boringcrypto gdcl/0.177.0
    user-agent: google-api-go-client/0.5 gke-metadata-server
    content-type: application/json
    content-length: 1673
    accept-encoding: gzip

    {"audience":"identitynamespace:test-gke-XXXXXX.svc.id.goog:https://container.googleapis.com/v1/projects/test-gke-XXXXXX/locations/us-central1/clusters/test","grantType":"urn:ietf:params:oauth:grant-type:token-exchange","requestedTokenType":"urn:ietf:params:oauth:token-type:access_token","scope":"https://www.googleapis.com/auth/cloud-platform https://www.googleapis.com/auth/userinfo.email","subjectToken":"XXX.XXX.XXX","subjectTokenType":"urn:ietf:params:oauth:token-type:jwt"}

    :status: 200
    content-type: application/json; charset=UTF-8
    vary: Origin
    vary: X-Origin
    vary: Referer
    content-encoding: gzip
    date: ...
    server: scaffolding on HTTPServer2
    content-length: 1061
    x-xss-protection: 0
    x-frame-options: SAMEORIGIN
    x-content-type-options: nosniff

    {"access_token":"ya29.d.XXX","issued_token_type":"urn:ietf:params:oauth:token-type:access_token","token_type":"Bearer","expires_in":3599}

The request body, pretty-printed:

    {
      "audience": "identitynamespace:test-gke-XXXXXX.svc.id.goog:https://container.googleapis.com/v1/projects/test-gke-XXXXXX/locations/us-central1/clusters/test",
      "grantType": "urn:ietf:params:oauth:grant-type:token-exchange",
      "requestedTokenType": "urn:ietf:params:oauth:token-type:access_token",
      "scope": "https://www.googleapis.com/auth/cloud-platform https://www.googleapis.com/auth/userinfo.email",
      "subjectToken": "XXX.XXX.XXX",
      "subjectTokenType": "urn:ietf:params:oauth:token-type:jwt"
    }

The response body, pretty-printed:

    {
      "access_token": "ya29.d.XXX",
      "issued_token_type": "urn:ietf:params:oauth:token-type:access_token",
      "token_type": "Bearer",
      "expires_in": 3599
    }
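The request body captured above has a regular structure: the audience is the string `identitynamespace:`, then the workload pool, then the identity provider URL, joined by colons. As a hedged sketch, the function below rebuilds that body from its inputs. The function name and parameters are made up for the example, and the field values are copied from the capture rather than from any API reference.

```python
def build_sts_exchange_body(workload_pool: str, identity_provider: str, subject_token: str) -> dict:
    """Rebuild the token exchange request body seen in the captured request."""
    return {
        # Format observed in the capture: identitynamespace:<pool>:<identity provider>
        "audience": f"identitynamespace:{workload_pool}:{identity_provider}",
        "grantType": "urn:ietf:params:oauth:grant-type:token-exchange",
        "requestedTokenType": "urn:ietf:params:oauth:token-type:access_token",
        "scope": (
            "https://www.googleapis.com/auth/cloud-platform "
            "https://www.googleapis.com/auth/userinfo.email"
        ),
        # The Kubernetes service account token (a JWT) issued by the apiserver.
        "subjectToken": subject_token,
        "subjectTokenType": "urn:ietf:params:oauth:token-type:jwt",
    }
```

The `workload_pool` and `identity_provider` arguments correspond to the `--workload-pool` and `--identity-provider` flags in the gke-metadata-server command line shown earlier.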

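Differences from GKE Workload Identity¶

Workload Identity Federation for GKE differs from the GKE Workload Identity feature (also known as "link Kubernetes ServiceAccounts to IAM") as follows:

| Item | GKE Workload Identity | Workload Identity Federation for GKE |
|---|---|---|
| Requires creating a Google service account (GSA) | Yes | No |
| Requires configuring the IAM roles bound to the GSA | Yes | Requires configuring the roles bound to the Kubernetes service account (KSA) |
| Requires allowing the KSA to impersonate the GSA | Yes | No |
| Requires configuring the GSA on the KSA inside the cluster | Yes | No |
| Supports specifying multiple KSAs/GSAs when binding a role | No | Yes, for example all SAs in a namespace or all SAs in a cluster |
| Supports authorization at the project + namespace + KSA level | Yes | Yes |
| Supports authorization at the cluster + namespace + KSA level | No | No |
| Supports applications that use hostNetwork | No | No |
| Depends on the gke-metadata-server component | Yes | Yes |
| Support for the obtained STS token across Google Cloud product APIs | Supported by almost all product APIs | Supported by most product APIs; some products have limited support and a few have none |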

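BTW, although the official tutorials and documentation for Workload Identity Federation for GKE all say that it depends on the gke-metadata-server component, the walkthrough above shows that the approach can also be used without installing gke-metadata-server. Specifically, we can remove the dependency on the component by mounting the required service account token into the application Pod and having the application call the STS Token API directly.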
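A rough sketch of what the application side of that idea could look like, using only the Python standard library. It assumes the Pod mounts a service account token whose audience is the workload pool (as in the payload shown in the service account token section), and that `body` carries the same fields as the request body shown in the sts token section. The function names and the token path in the usage note are hypothetical, and the endpoint is the one seen in the captured request.

```python
import json
import urllib.request
from pathlib import Path

# Host and path taken from the captured request (sts.googleapis.com, /v1/token).
STS_TOKEN_ENDPOINT = "https://sts.googleapis.com/v1/token"


def read_projected_token(path: str) -> str:
    """Read a service account token from a file mounted into the Pod."""
    return Path(path).read_text(encoding="utf-8").strip()


def build_exchange_request(body: dict, endpoint: str = STS_TOKEN_ENDPOINT) -> urllib.request.Request:
    """Build the POST request that sends the JSON token exchange body."""
    return urllib.request.Request(
        endpoint,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def exchange_token(body: dict, timeout: float = 10.0) -> dict:
    """Send the request and return the parsed JSON response (requires network access)."""
    with urllib.request.urlopen(build_exchange_request(body), timeout=timeout) as resp:
        return json.loads(resp.read())
```

An application would read the token with something like `read_projected_token("/var/run/secrets/tokens/gcp-ksa/token")` (a placeholder path that depends on how the volume is mounted), place it in the `subjectToken` field of the body, and use the `access_token` field of the response as a Bearer token.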

References¶

- Make IAM for GKE easier to use with Workload Identity Federation | Google Cloud Blog

- Authenticate to Google Cloud APIs from GKE workloads | Google Kubernetes Engine (GKE)

- About Workload Identity Federation for GKE | Google Kubernetes Engine (GKE) | Google Cloud

- Identity federation: products and limitations | IAM Documentation | Google Cloud

- Method: token | IAM Documentation | Google Cloud

- About VM metadata | Compute Engine Documentation | Google Cloud
